
- doc/images/fnirt_warp_field.xcf: 0 additions, 0 deletions

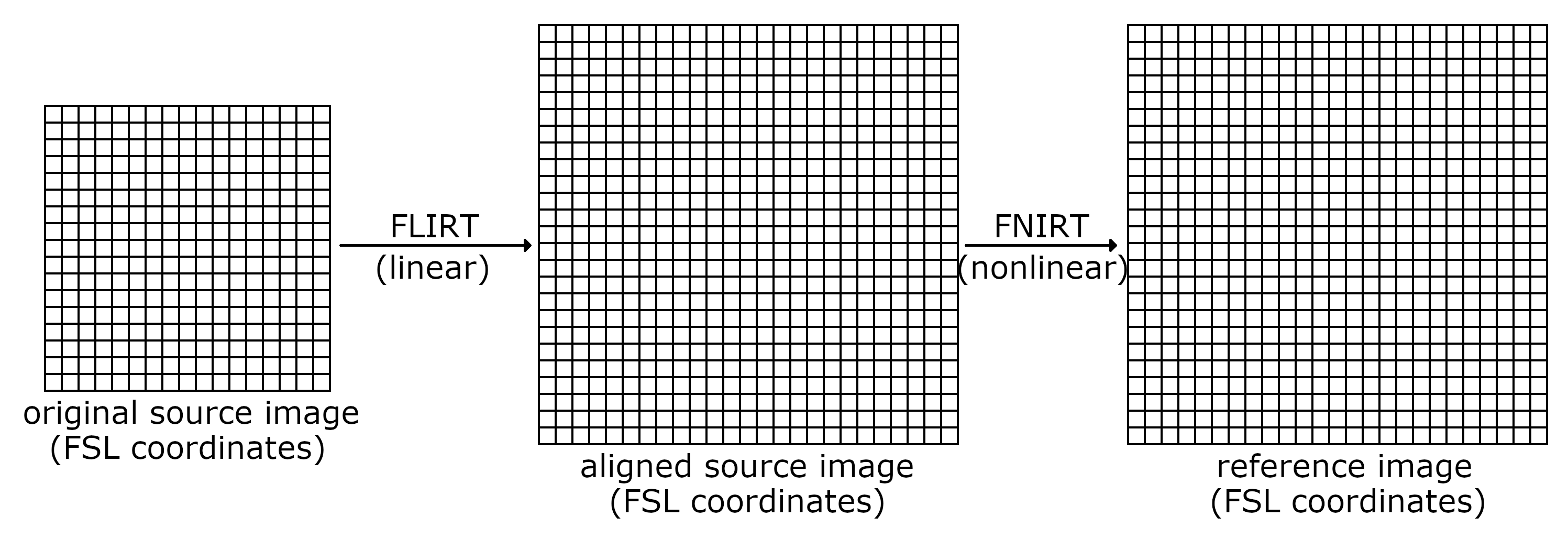

- doc/images/nonlinear_registration_process.png: 0 additions, 0 deletions
- doc/images/nonlinear_registration_process.xcf: 0 additions, 0 deletions
- doc/index.rst: 47 additions, 2 deletions
- doc/mock_modules.txt: 5 additions, 0 deletions
- fsl/__init__.py: 0 additions, 25 deletions
- fsl/data/atlases.py: 211 additions, 80 deletions
- fsl/data/bitmap.py: 186 additions, 0 deletions
- fsl/data/cifti.py: 493 additions, 0 deletions
- fsl/data/constants.py: 39 additions, 1 deletion
- fsl/data/dicom.py: 161 additions, 61 deletions
- fsl/data/featanalysis.py: 192 additions, 91 deletions
- fsl/data/featdesign.py: 15 additions, 18 deletions
- fsl/data/featimage.py: 72 additions, 34 deletions
- fsl/data/fixlabels.py: 241 additions, 128 deletions
- fsl/data/freesurfer.py: 4 additions, 2 deletions
- fsl/data/gifti.py: 136 additions, 39 deletions
- fsl/data/image.py: 1173 additions, 473 deletions
- fsl/data/imagewrapper.py: 104 additions, 155 deletions
- fsl/data/melodicanalysis.py: 24 additions, 20 deletions

Some changes are not shown: only 20 of 1000+ changed files are listed.

doc/images/fnirt_warp_field.xcf: file added (mode 0 → 100644), 66.3 KiB

fsl/__init__.py: file deleted (mode 100644 → 0)

fsl/data/bitmap.py: file added (mode 0 → 100644)

fsl/data/cifti.py: file added (mode 0 → 100644)
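The `0 → 100644` and `100644 → 0` notations in these diff headers are git file-mode transitions: `100644` is git's mode for a regular, non-executable file, and `0` means the path does not exist on that side of the diff (so the file was added or deleted). A minimal sketch of decoding such a transition follows; the function name `describe_mode_change` and the mode table are illustrative assumptions, not part of fslpy or of any git tooling.

```python
# Standard git object modes, as octal strings shown in diffs.
# Illustrative lookup table (hypothetical helper, not a git API).
GIT_MODES = {
    "100644": "regular file",
    "100755": "executable file",
    "120000": "symbolic link",
    "160000": "submodule (gitlink)",
    "040000": "directory (tree)",
}


def _is_absent(mode: str) -> bool:
    """A mode of 0 (or 000000) means the path is absent on that side."""
    return int(mode, 8) == 0


def _name(mode: str) -> str:
    """Human-readable name for a mode, falling back to the raw value."""
    return GIT_MODES.get(mode, "mode " + mode)


def describe_mode_change(old: str, new: str) -> str:
    """Describe a git mode transition such as '0' -> '100644'."""
    if _is_absent(old) and _is_absent(new):
        raise ValueError("path is absent on both sides of the diff")
    if _is_absent(old):
        return f"added ({_name(new)})"
    if _is_absent(new):
        return f"deleted (was {_name(old)})"
    if old == new:
        return f"unchanged ({_name(old)})"
    return f"mode changed: {_name(old)} -> {_name(new)}"


if __name__ == "__main__":
    print(describe_mode_change("0", "100644"))  # added (regular file)
    print(describe_mode_change("100644", "0"))  # deleted (was regular file)
```

Under this reading, `fsl/data/bitmap.py` and `fsl/data/cifti.py` are newly added regular files and `fsl/__init__.py` is removed, which matches their 0-deletion and 0-addition counts in the list above.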