Merge branch 'master' into 'master'

Master

See merge request fsl/pytreat-2018-practicals!45


- talks/tensorflow/.gitkeep (0 additions, 0 deletions)

- talks/tensorflow/demos/.gitkeep (0 additions, 0 deletions)

- talks/tensorflow/demos/convolutional_network_demo.ipynb (435 additions, 0 deletions)

- talks/tensorflow/demos/labels_1024.tsv (1024 additions, 0 deletions)

- talks/tensorflow/demos/sprite_1024.png (0 additions, 0 deletions)

- talks/tensorflow/demos/tensorboard_demo.ipynb (223 additions, 0 deletions)

- talks/tensorflow/img/.gitkeep (0 additions, 0 deletions)

- talks/tensorflow/img/bigger_boat.jpg (0 additions, 0 deletions)

- talks/tensorflow/img/cg/.gitkeep (0 additions, 0 deletions)

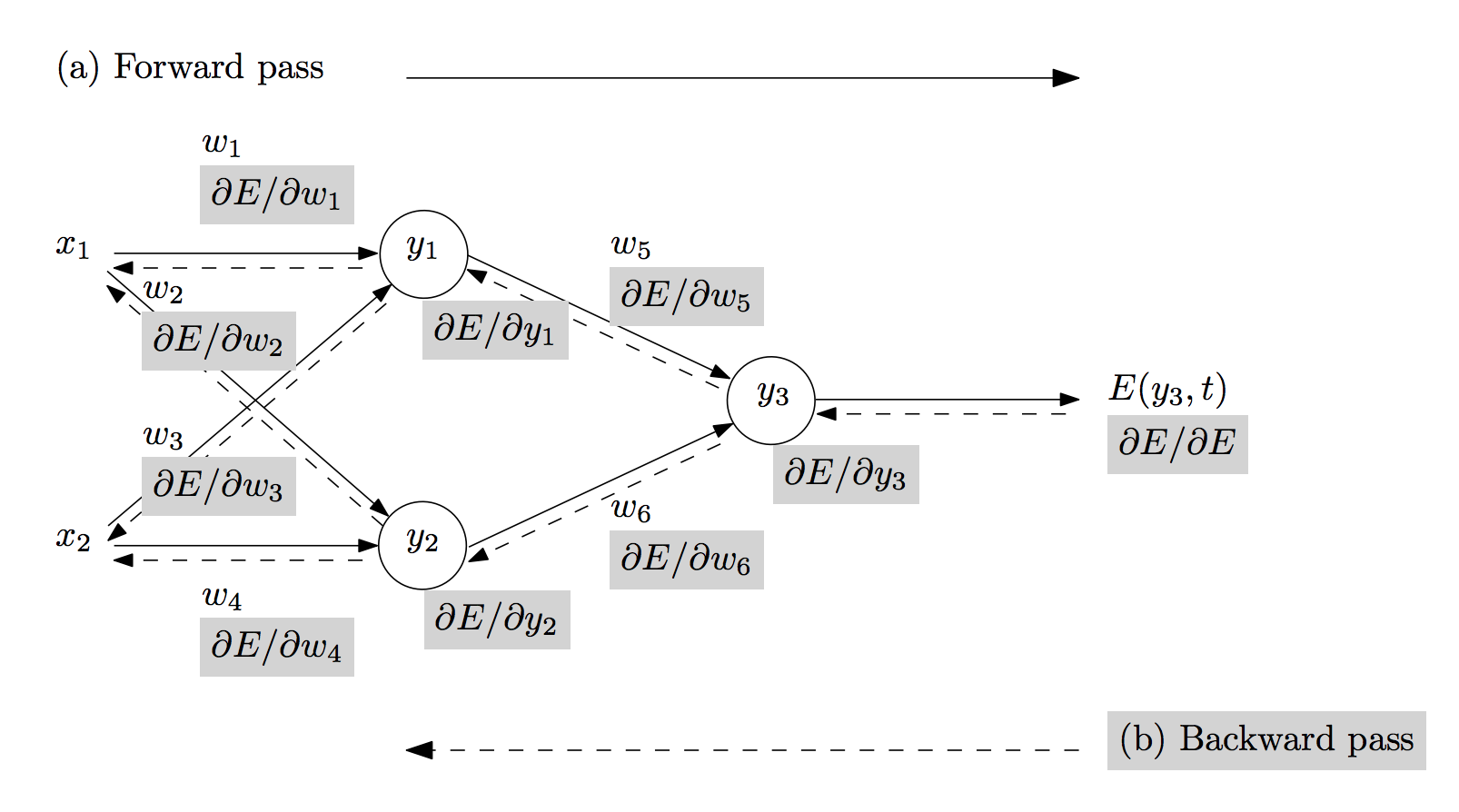

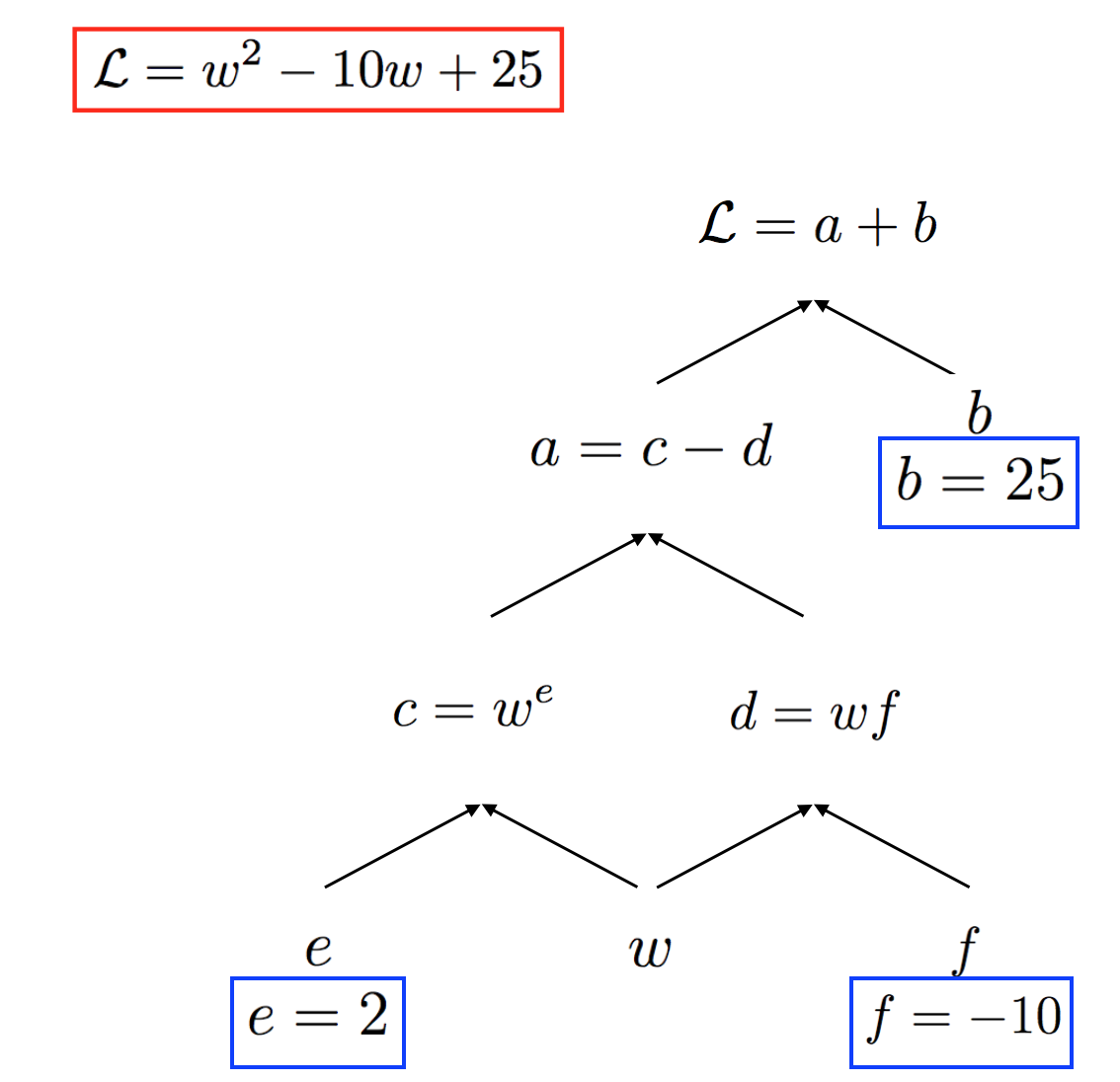

- talks/tensorflow/img/cg/ad.png (0 additions, 0 deletions)

- talks/tensorflow/img/cg/cg1.png (0 additions, 0 deletions)

- talks/tensorflow/img/copyright_infringement.png (0 additions, 0 deletions)

- talks/tensorflow/img/demos/.gitkeep (0 additions, 0 deletions)

- talks/tensorflow/img/demos/nothing_here (0 additions, 0 deletions)

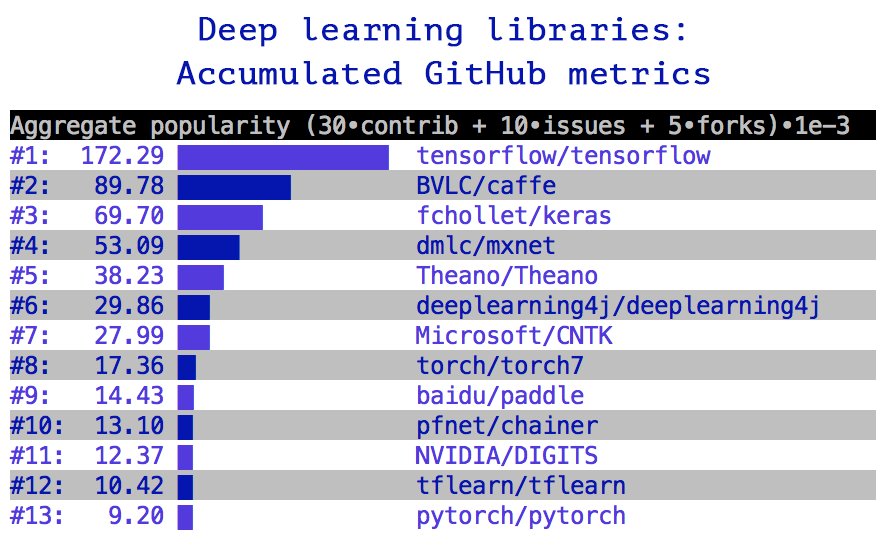

- talks/tensorflow/img/fchollet_popularity_2017.jpg (0 additions, 0 deletions)

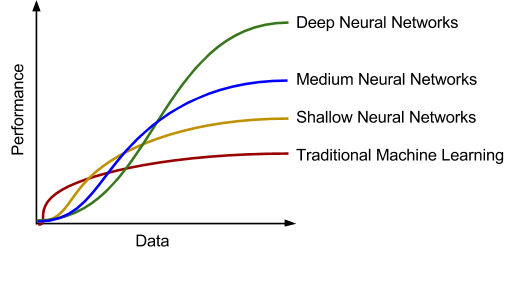

- talks/tensorflow/img/how_big_is_your_data.png (0 additions, 0 deletions)

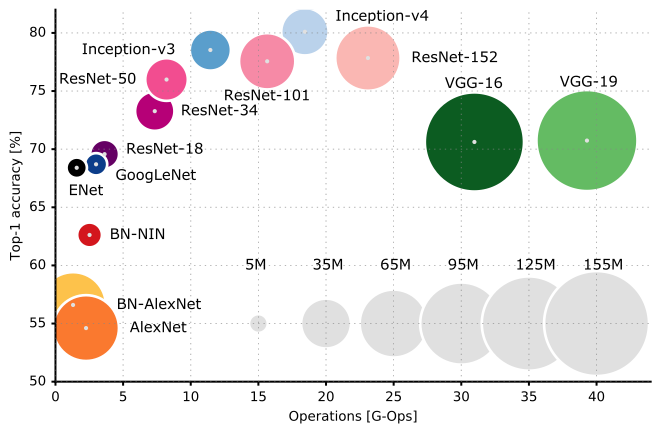

- talks/tensorflow/img/model_zoo.png (0 additions, 0 deletions)

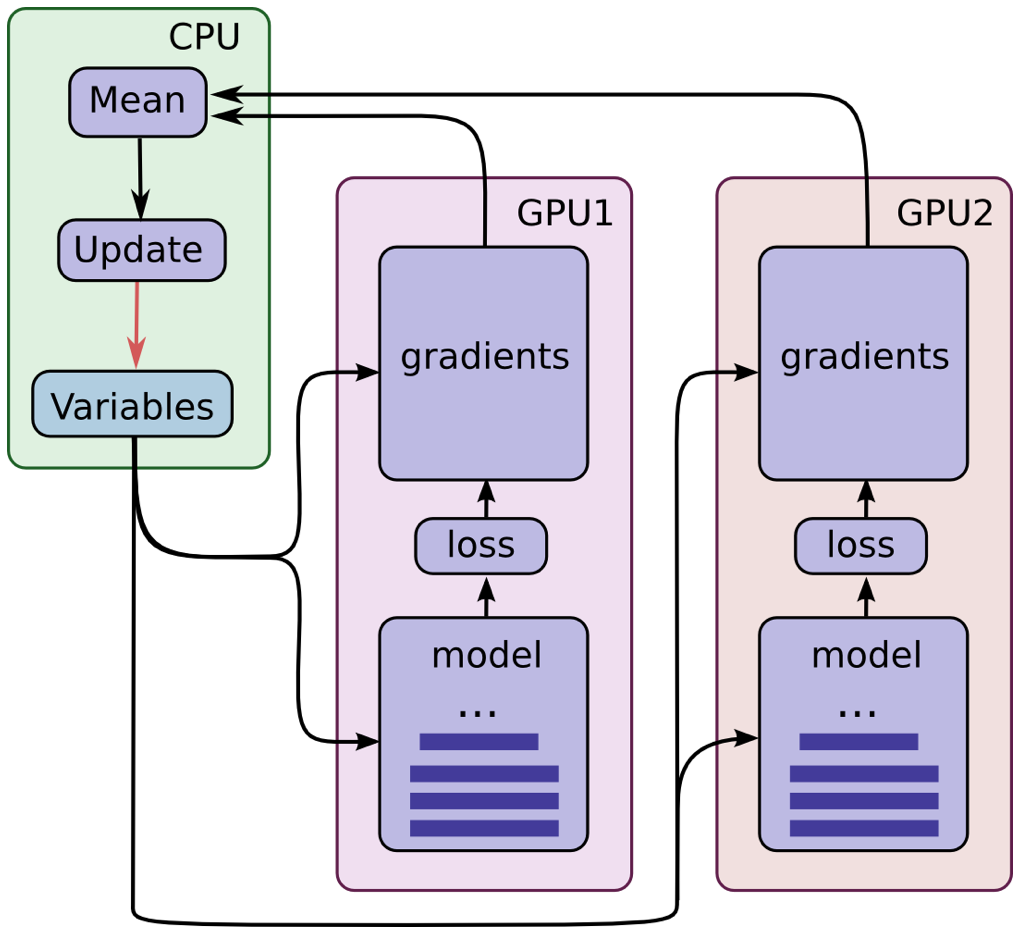

- talks/tensorflow/img/multiple_devices.png (0 additions, 0 deletions)

- talks/tensorflow/img/so_hot_right_now.jpg (0 additions, 0 deletions)

- talks/tensorflow/img/what_leo_needs.png (0 additions, 0 deletions)

talks/tensorflow/.gitkeep

0 → 100644

talks/tensorflow/demos/.gitkeep

0 → 100644

talks/tensorflow/demos/labels_1024.tsv

0 → 100644

talks/tensorflow/demos/sprite_1024.png

0 → 100644

169 KiB

talks/tensorflow/img/.gitkeep

0 → 100644

talks/tensorflow/img/bigger_boat.jpg

0 → 100644

77.3 KiB

talks/tensorflow/img/cg/.gitkeep

0 → 100644

talks/tensorflow/img/cg/ad.png

0 → 100644

156 KiB

talks/tensorflow/img/cg/cg1.png

0 → 100644

113 KiB

419 KiB

talks/tensorflow/img/demos/.gitkeep

0 → 100644

talks/tensorflow/img/demos/nothing_here

0 → 100644

91.9 KiB

16.1 KiB

talks/tensorflow/img/model_zoo.png

0 → 100644

62.7 KiB

talks/tensorflow/img/multiple_devices.png

0 → 100644

151 KiB

talks/tensorflow/img/so_hot_right_now.jpg

0 → 100644

78.7 KiB

talks/tensorflow/img/what_leo_needs.png

0 → 100644

280 KiB